The brain retains and uses organized information, helping us recognize visual stimuli based on past experiences.

The study, “Visual experience reduces the spatial redundancy between cortical feedback inputs and primary visual cortex neurons”, has been published in the journal Neuron.

The study indicates that neurons are interconnected in a way that links concepts that seem distinct. This connectivity may play a vital role in improving the brain's capacity to anticipate visual stimuli based on previous experiences, and it may help researchers understand how this mechanism goes wrong in mental health disorders.

Did You Know?

The brain contains about 25% of the body's cholesterol, which is critical for brain cells.

How the Brain Integrates Real-World Experience with Prior Knowledge

As time progresses, our brain constructs a structured hierarchy of knowledge, associating more complex concepts with the fundamental characteristics that define them. For example, we come to understand that cabinets are equipped with drawers and that Dalmatian dogs possess black-and-white spots, rather than the reverse. This intricate network influences our expectations and perceptions of the world, enabling us to recognize what we observe based on context and prior experiences.

“Take an elephant”, says Leopoldo Petreanu, senior author of the la Caixa-funded study. “Elephants are associated with lower-order attributes such as colour, size, and weight, as well as higher-order contexts like jungles or safaris. Connecting concepts helps us understand the world and interpret ambiguous stimuli. If you’re on a safari, you may be more likely to spot an elephant behind the bushes than you would otherwise. Similarly, knowing it’s an elephant makes you more likely to perceive it as grey even in the dim light of dusk. But where in the fabric of the brain is this prior knowledge stored, and how is it learned?”

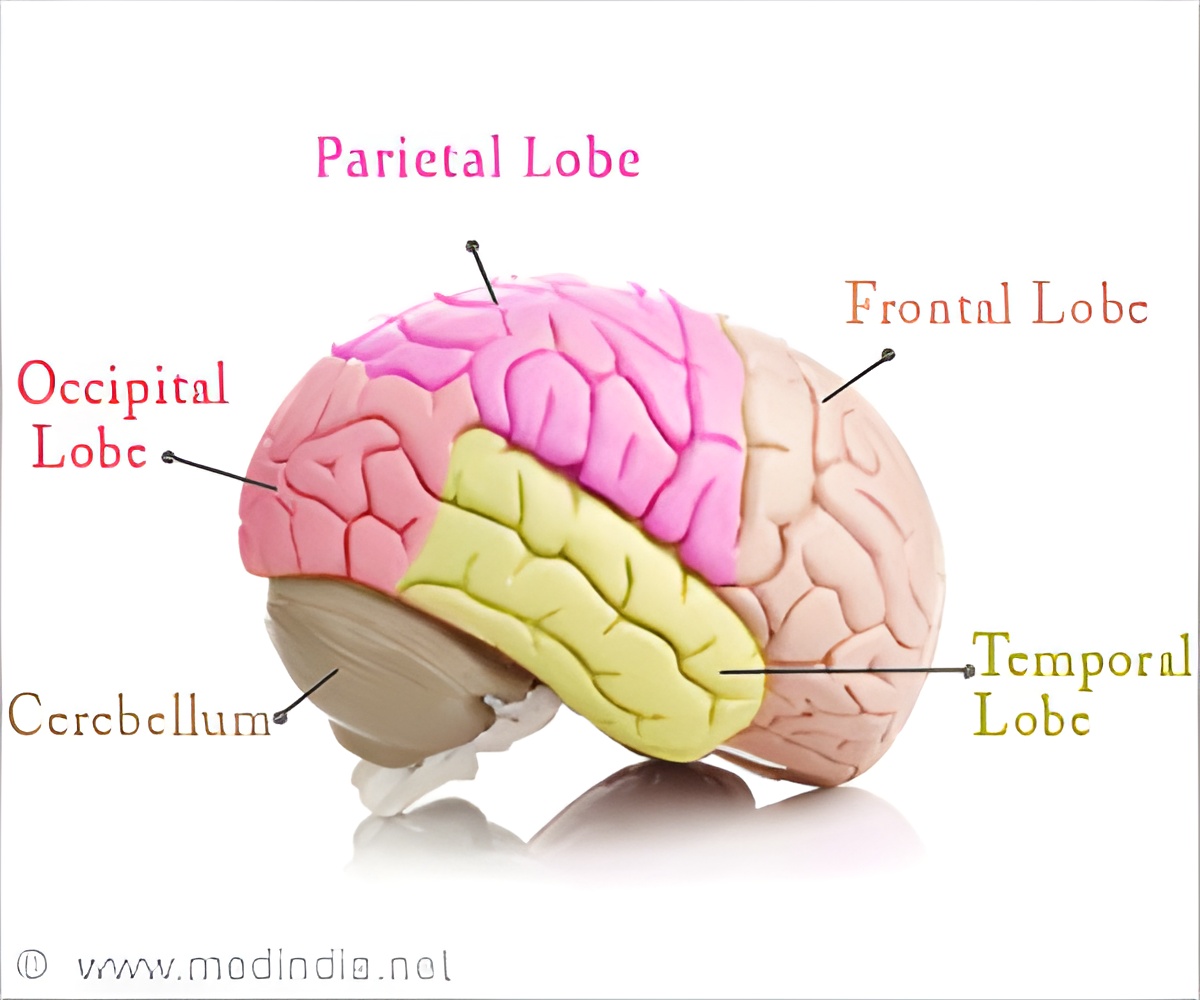

How Neurons Communicate Feedback to Predict Visual Stimuli

The visual system of the brain is composed of a network of areas that collaborate. Lower regions process basic details such as small areas of space, colors, and edges, while higher regions deal with more intricate concepts, including larger areas of space, animals, and faces. Neurons in the higher regions send "feedback" connections to the lower regions, enabling them to learn and integrate real-world relationships shaped by experience. For example, cells encoding an “elephant” might send feedback to cells processing features like “grey”, “big” and “heavy”. The functional significance of these feedback projections remains largely unclear, so the researchers set out to explore how visual experience affects their organization.

“We wanted to understand how these feedback projections store information about the world”, says Rodrigo Dias, one of the study’s first authors. “To do this, we examined the effects of visual experience on feedback projections to a lower visual area called V1 in mice. We raised two groups of mice differently: one in a normal environment with regular light exposure, and the other in darkness. We then observed how the feedback connections, and cells they target in V1, responded to different regions of the visual field”.

In mice raised in darkness, the feedback connections and V1 cells directly below them both represented the same areas of visual space. First author Radhika Rajan picks up the story, “It was amazing to see how well the spatial representations of higher and lower areas matched up in the dark-reared mice. This suggests that the brain has an inherent, genetic blueprint for organising these spatially aligned connections, independent of visual input”. However, in normally-reared mice, these connections were less precisely matched, and more feedback inputs conveyed information from surrounding areas of the visual field.

Rajan continues, “We found that with visual experience, feedback provides more contextual and novel information, enhancing the ability of V1 cells to sample information from a broader area of the visual scene”. This effect depended on the origin within the higher visual area: feedback projections from deeper layers were more likely to convey surround information compared to those from superficial layers.

Moreover, the team discovered that in normally-reared mice, deep-layer feedback inputs to V1 become organised according to the patterns they “prefer” to see, such as vertical or horizontal lines. “For instance”, Dias says, “inputs that prefer vertical lines avoid sending surround information to areas located along the vertical direction. In contrast, we found no such bias in connectivity in dark-reared mice”.

“This suggests that visual experience plays a crucial role in fine-tuning feedback connections and shaping the spatial information transmitted from higher to lower visual areas”, notes Petreanu. “We developed a computational model that shows how experience leads to a selection process, reducing connections between feedback and V1 cells whose representations overlap too much. This minimises redundancy, allowing V1 cells to integrate a more diverse range of feedback”.
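
The selection process Petreanu describes can be pictured with a small toy example in Python. This is only a loose sketch and not the study's computational model: the Gaussian overlap measure, the receptive-field width, the 0.8 overlap threshold, and every function name below are assumptions made purely for illustration. It starts from feedback inputs that are closely aligned with a single V1 cell, as in the dark-reared mice, and then removes the inputs whose receptive fields overlap too much with that cell.

```python
import math
import random


def overlap(center_a, center_b, width=10.0):
    """Toy similarity between two receptive-field centres (assumed Gaussian)."""
    dx = center_a[0] - center_b[0]
    dy = center_a[1] - center_b[1]
    return math.exp(-(dx * dx + dy * dy) / (2 * width * width))


def prune_redundant(v1_center, feedback_centers, max_overlap=0.8):
    """Keep only feedback inputs whose overlap with the V1 cell is at most max_overlap."""
    return [c for c in feedback_centers if overlap(v1_center, c) <= max_overlap]


def mean_offset(v1_center, feedback_centers):
    """Average distance between the V1 cell's centre and its feedback inputs."""
    total = sum(math.dist(v1_center, c) for c in feedback_centers)
    return total / len(feedback_centers)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
v1_center = (0.0, 0.0)

# Hypothetical starting point: feedback inputs clustered around the V1 cell.
initial = [(rng.gauss(0, 8), rng.gauss(0, 8)) for _ in range(200)]

# Hypothetical effect of experience: highly overlapping inputs are removed.
after = prune_redundant(v1_center, initial)

print("inputs before:", len(initial), "after:", len(after))
print("mean offset before:", round(mean_offset(v1_center, initial), 2))
print("mean offset after:", round(mean_offset(v1_center, after), 2))
```

In this toy setting, the inputs that survive pruning sit farther from the V1 cell's own location on average, which echoes the finding that feedback in normally-reared mice carries more information from the surrounding visual field.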

How This Brain Mechanism Can Help in Mental Health Disorders

Perhaps counterintuitively, the brain might encode learned knowledge by connecting cells that represent unrelated concepts, and that are less likely to be activated together based on real-world patterns. This could be an energy-efficient way to store information, so that when encountering a novel stimulus, like a pink elephant, the brain’s preconfigured wiring maximises activation, enhancing detection and updating predictions about the world.

Identifying this brain interface where prior knowledge combines with new sensory information could be valuable for developing interventions in cases where this integration process malfunctions.

As Petreanu concludes, “Such imbalances are thought to occur in conditions like autism and schizophrenia. In autism, individuals may perceive everything as novel because prior information isn’t strong enough to influence perception. Conversely, in schizophrenia, prior information could be overly dominant, leading to perceptions that are internally generated rather than based on actual sensory input. Understanding how sensory information and prior knowledge are integrated can help address these imbalances”.

Reference:

- Visual experience reduces the spatial redundancy between cortical feedback inputs and primary visual cortex neurons. Neuron. (https://www.cell.com/neuron/abstract/S0896-6273(24)00531-2)

Source-Eurekalert