Scientists are reporting that a man with locked-in syndrome has spoken three different vowel sounds using a voice synthesiser controlled by an implant deep in his brain.

The device could revolutionise speech for paralysed people.

The scientists said that if they can add more sounds to the repertoire of brain signals the implant can translate, such systems could be a breakthrough in communication for these patients.

"We're very optimistic that the next patient will be able to say words," New Scientist quoted Frank Guenther, a neuroscientist at Boston University who led the study, as saying.

Eric Ramsey, 26, has locked-in syndrome, in which people are unable to move a muscle but are fully conscious.

Guenther said that while a brain implant requiring invasive surgery may seem drastic, lifting signals directly from neurons may be the only way that locked-in people like Ramsey, or those with advanced forms of ALS, a neurodegenerative disease, will ever be able to communicate quickly and naturally.

Devices that use brain signals captured by scalp electrodes are slow, allowing typing on a keyboard at a rate of one to two words per minute.

"Our approach has the potential for providing something along the lines of conventional speech as opposed to very slow typing," said Guenther.

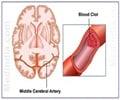

Ramsey, who suffered a brain-stem stroke at the age of 16, has an electrode implanted into a brain area that plans the movements of the vocal cords and tongue that underlie speech.

The researchers used these predictions to translate the firing patterns of several dozen cells in Ramsey's brain into the acoustic building blocks of speech.

"It's a very subtle code; you're looking over many neurons. You don't have one neuron that represents 'aaa' and another that represents 'eee'. It's way messier than that," said Guenther.

Next, Guenther's team provided Ramsey with audio feedback of the computer's interpretation of his neurons, allowing him to tune his thoughts to hit a specific vowel.

Over 25 trials across many months, Ramsey improved from hitting 45 per cent of vowels to 70 per cent.

Although the ability to produce three distinct vowels from brain signals won't allow for much communication, Guenther said that technological improvements should yield a next-generation decoder producing whole words in three to five years.

This next device will read from far more neurons and so should be able to extract the brain signals underlying consonants, he said.

The team plan to have it controlled by a laptop, so people can practise speaking at home as much as they like.

The study has been published in the journal PLoS One.

Source-ANI

RAS