A mind-reading algorithm uses electroencephalography (EEG) data to reconstruct images of what people perceive based on their brain activity.

‘EEG, an inexpensive method of analyzing the brain’s activity, can now be used to reconstruct images based on how our brains perceive faces’

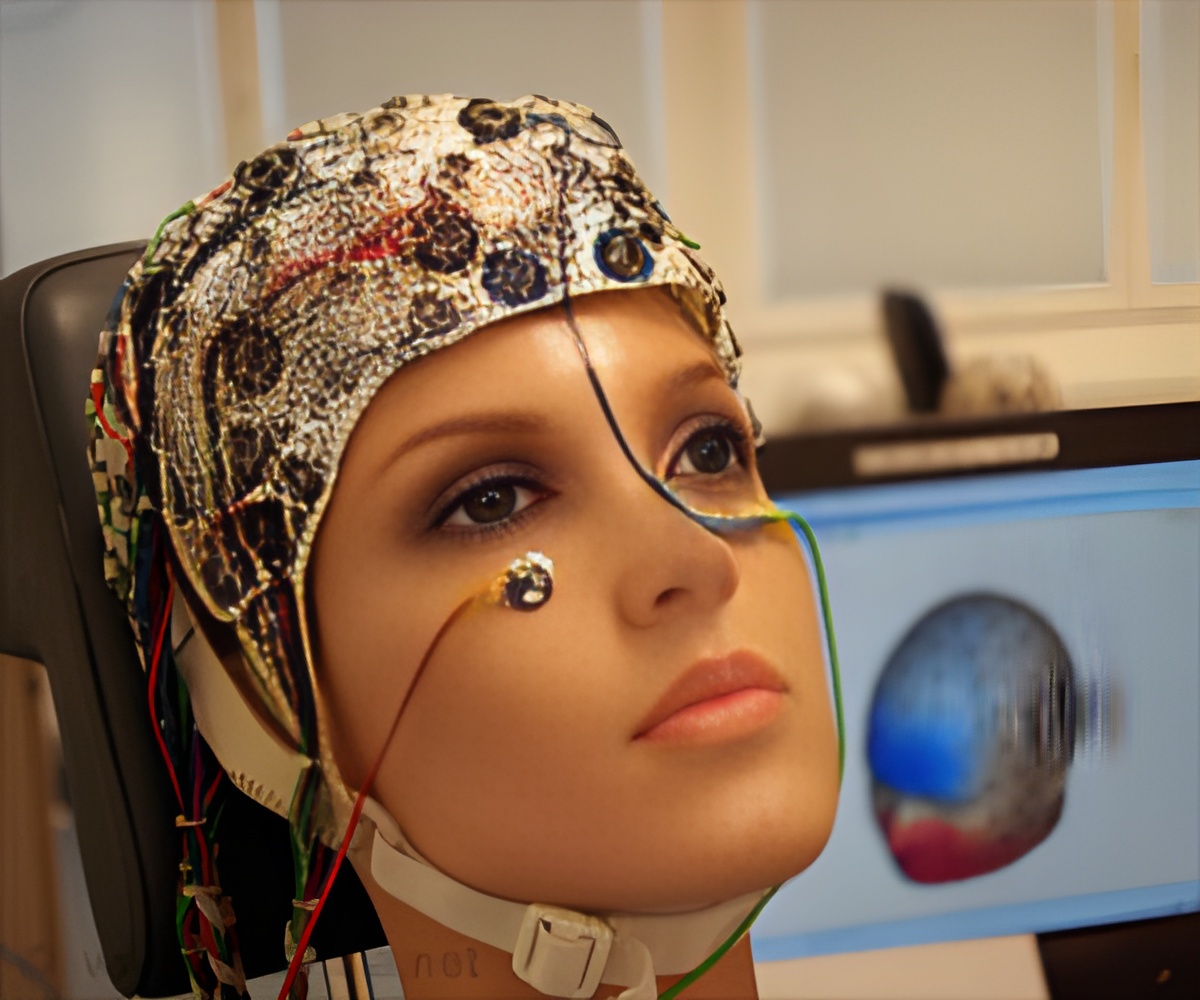

"When we see something, our brain creates a mental percept, which is essentially a mental impression of that thing. We were able to capture this percept using EEG to get a direct illustration of what's happening in the brain during this process," says Nemrodov. For the study, test subjects hooked up to EEG equipment were shown images of faces. Their brain activity was recorded and then used to digitally recreate the image in the subject's mind using a technique based on machine learning algorithms.

It's not the first time scientists have used neuroimaging techniques to reconstruct images based on visual stimuli. The current method was pioneered by Nestor, who has previously reconstructed facial images from functional magnetic resonance imaging (fMRI) data, but this is the first time the approach has been applied to EEG.

And while techniques like fMRI, which measures brain activity by detecting changes in blood flow, can capture finer detail of what's going on in specific areas of the brain, EEG has greater practical potential because it is more common, portable, and inexpensive by comparison. EEG also has greater temporal resolution, meaning it can track in detail how a percept develops over time, down to the millisecond, explains Nemrodov.

"fMRI captures activity at the time scale of seconds, but EEG captures activity at the millisecond scale. So we can see with very fine detail how the percept of a face develops in our brain using EEG," he says. In fact, the researchers were able to estimate that it takes our brain about 170 milliseconds (0.17 seconds) to form a good representation of a face we see.


As a next step, work is currently underway in Nestor's lab to test whether image reconstruction based on EEG data can be extended to images held in memory and to a wider range of objects beyond faces. The technique could eventually have wide-ranging clinical applications as well.


"It could also have forensic uses for law enforcement in gathering eyewitness information on potential suspects rather than relying on verbal descriptions provided to a sketch artist."

The research, which will be published in the journal eNeuro, is funded by the Natural Sciences and Engineering Research Council of Canada (NSERC) and by a Connaught New Researcher Award.

"What's really exciting is that we're not reconstructing squares and triangles but actual images of a person's face, and that involves a lot of fine-grained visual detail," adds Nestor.

"The fact we can reconstruct what someone experiences visually based on their brain activity opens up a lot of possibilities. It unveils the subjective content of our mind and it provides a way to access, explore and share the content of our perception, memory and imagination."

Source: EurekAlert!