Neural computation scientists at Bonn University have created a software system that they hope will significantly improve the function of retinal implants. With the aid of the software, the visual prosthesis "learns" to generate exactly those signals that the brain expects and can interpret.

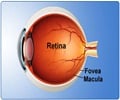

Nearly two dozen patients in Germany and the U.S. have so far been implanted with a visual prosthesis. For this purpose, clinicians open the eyeball and attach a thin foil to the retina. Small protruding contacts reach the neurons that form the ganglion cell layer of the retina. These electrical stimulation contacts feed camera signals into the optic nerve. The camera may be attached to a glasses frame and transmits its signals wirelessly to the implant.

So far, the results have not met the high expectations. "The camera generates electrical signals that are almost useless for the brain," comments Rolf Eckmiller, a professor at the Department of Computer Science at Bonn University. "Our own system translates the camera signals into a language that the central visual system in the brain understands." Unfortunately, the central visual system of each individual speaks a different dialect, which makes the translator's task difficult. For this reason, the computer and neural scientist developed the "Retina Encoder" together with his graduate students Oliver Baruth and Rolf Schatten. At the Hanover Fair he is looking for commercial partners for the next step, clinical trials.

"In principle, the Retina Encoder is a computer program that converts the camera signals and forwards them to the retinal implant," says Oliver Baruth, explaining its function. "The encoder learns in a continuous process how to change the camera output signal so that the respective patient can perceive the image." The learning dialog is currently being tested with normally sighted volunteers. The camera images are translated by the Retina Encoder and then forwarded to a kind of "virtual central visual system." This simulation mimics how the brain interprets the converted camera data.

Initially, the Retina Encoder does not know which language the virtual central visual system speaks. The software therefore translates the original picture, for example a ring, into different, randomly selected "dialects". This produces variations of the picture that are more or less similar to a ring. The volunteer sees these variations on a small screen integrated into a glasses frame. Using head movements, the person selects the variations that look most like a ring. From these choices, the learning software draws conclusions about how to improve the translation. In the next learning cycle, several new picture variations are presented that already look more similar to the original. In this way, the Retina Encoder adapts step by step to the language of the virtual central visual system. In the current tests it works very well; however, the scientists have not yet tested their system in patients. They emphasize that, in principle, the Retina Encoder could be integrated into implanted visual prostheses within a few months.
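The learning cycle described above can be illustrated with a toy simulation. The following Python sketch is not the Bonn group's software, and the article does not describe its internals. It assumes a deliberately simple model in which the encoder applies one adjustable gain per pixel, the "virtual central visual system" applies its own hidden per-pixel gain (its "dialect"), and a simulated volunteer always picks the variation that looks most like the original. All names (`RING`, `DIALECT`, `encode`, `perceive`, `learn_encoder`) and all numeric values are hypothetical.

```python
import random

# Hypothetical 3x3 "ring" picture, flattened row by row (1 = bright, 0 = dark).
RING = [1.0, 1.0, 1.0,
        1.0, 0.0, 1.0,
        1.0, 1.0, 1.0]

# Assumed "dialect" of the simulated visual system: one hidden gain per pixel.
# The learner never reads these values; it only sees which variation is chosen.
DIALECT = [0.5, 1.5, 0.8, 2.0, 1.0, 0.6, 1.2, 0.9, 1.7]


def encode(image, params):
    """Toy encoder: scale each camera pixel by an adjustable gain."""
    return [p * x for p, x in zip(params, image)]


def perceive(signal, dialect):
    """Toy 'virtual central visual system': scale each signal by its hidden gain."""
    return [d * s for d, s in zip(dialect, signal)]


def mismatch(percept, target):
    """Sum of squared differences between what is perceived and the original."""
    return sum((a - b) ** 2 for a, b in zip(percept, target))


def score(params, image, dialect):
    """How far the perceived picture is from the original for given encoder gains."""
    return mismatch(perceive(encode(image, params), dialect), image)


def learn_encoder(image, dialect, cycles=200, variants=6, step=0.2, seed=0):
    """Repeat: propose random variations, keep the one the 'volunteer' prefers."""
    rng = random.Random(seed)
    params = [1.0] * len(image)  # start with an untrained, pass-through encoder
    for _cycle in range(cycles):
        # The current translation competes with several randomly perturbed ones.
        candidates = [params]
        for _variant in range(variants):
            candidates.append([p + rng.gauss(0.0, step) for p in params])
        # Simulated volunteer: selects the variation most similar to the original.
        params = min(candidates, key=lambda c: score(c, image, dialect))
    return params


if __name__ == "__main__":
    untrained = [1.0] * len(RING)
    learned = learn_encoder(RING, DIALECT)
    print("mismatch before learning:", score(untrained, RING, DIALECT))
    print("mismatch after learning: ", score(learned, RING, DIALECT))
```

Because the current translation always remains among the candidates, the mismatch in this sketch can never get worse from one cycle to the next. A real volunteer would of course choose less consistently than the simulated one, and a real encoder would involve far more than per-pixel gains.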

In normally sighted humans, a kind of natural Retina Encoder is already integrated in the retina: specifically, four layers of nerve cells are positioned in front of the photoreceptor cells. "The retina is a transparent biocomputer," Eckmiller says. "It transforms the electrical signals of rod and cone photoreceptors into a complex signal." This signal reaches the brain via the optic nerve.

In the brain, this complex information is decoded. The brain acquires the ability to do so within the first months of life. During this time, the central visual system adjusts individually to the retinal signals: the brain learns how to interpret the data from the optic nerve. In adults who become blind later in life, however, the central visual system has already matured and can no longer change easily. "If the central visual system is not as flexible anymore, the artificial retina has to be," Eckmiller points out. "The artificial retina must learn to generate signals that are useful for the brain. And exactly this learning ability distinguishes our Retina Encoder."

Source-Eurekalert

SRM